Embodying Control in 3D Space with 7 dimensions of control

Puppet

Guided by

Prof. Venkatesh R.

Prof. Jayesh Pillai

Collaborators

Utkarsha Kate

Saie G

Abhishek Benny

Interaction Design

Tangible User Interfaces

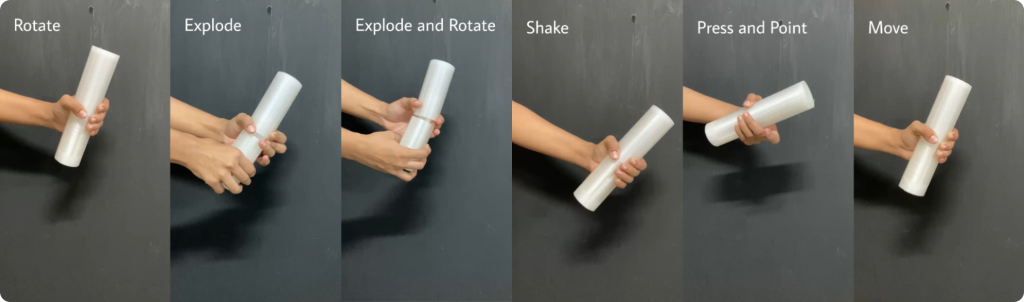

Puppet is an interaction design project that explores how physical gestures can be used to control and present three-dimensional digital models. We designed a handheld tangible device that translates real-world movements, such as twisting, tilting, rotating, and opening, into corresponding actions in a 3D environment. The project responds to the growing need for natural, expressive, and embodied ways of interacting with 3D content, for example during design presentations.

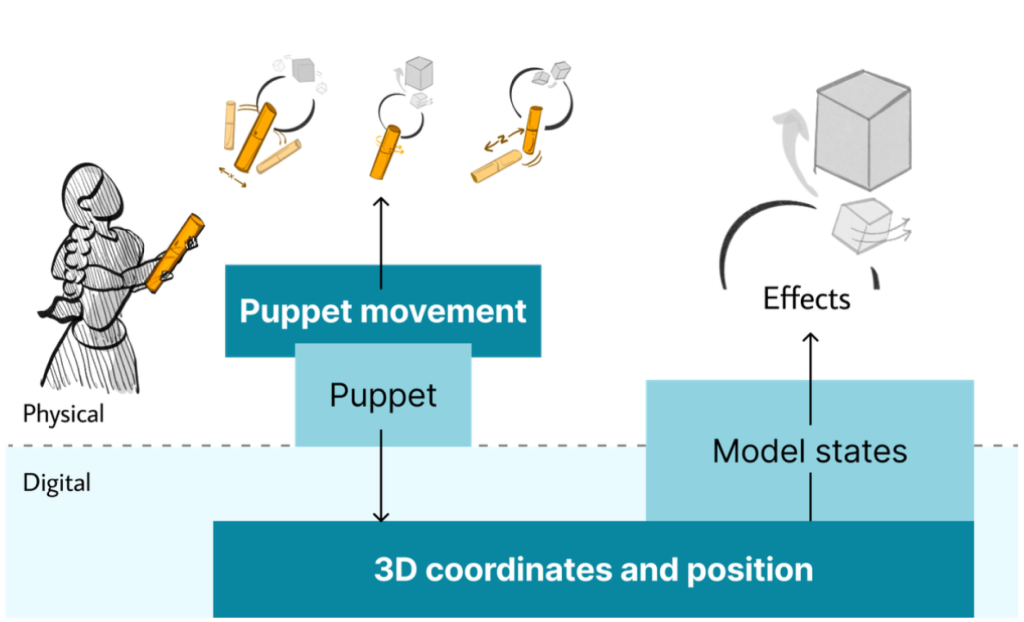

At its core, Puppet serves as a bridge between the physical and digital worlds. Using embedded motion sensors, it allows presenters to manipulate virtual objects by moving the device in space, offering a sense of immediacy and physical presence that traditional input tools lack. The outcome demonstrates how tangible interaction can enrich storytelling, spatial understanding, and performative communication in design.

Background

This project was carried out as part of the M.Des Interaction Design program at IDC School of Design, IIT Bombay. It was a group project involving four members: myself, Utkarsha Kate, Saie Gokhale, and Abhishek Benny. We collaborated over several weeks. Each member took on complementary roles spanning research, prototyping, coding, and visual design, ensuring a multidisciplinary approach to both the physical and digital aspects of the system.

The course encouraged students to explore embodied interaction, tangible user interfaces, and sensor-based interaction, helping them learn how design thinking can extend beyond screens into physical forms of communication. Through this project, the team was expected to learn not just technical integration, but how embodied gestures could enhance expressiveness and engagement in digital experiences.

Strategy

We started with a broad exploration of embodied and tangible interaction concepts. Early ideas involved fractal mapping and interactive wands for controlling narratives through movement. These explorations helped the team identify what felt both expressive and purposeful. The concept evolved into Puppet, a handheld stick that naturally connects gesture with digital manipulation.

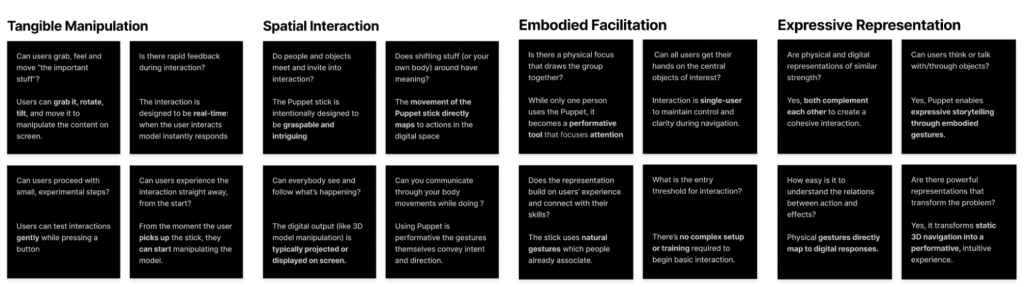

Our approach emphasized iterative prototyping and embodied experimentation. We used frameworks such as Eva Hornecker’s Card Brainstorming Game to generate diverse ideas and think through interaction possibilities. Each iteration tested the relationship between movement, affordance, and meaning, leading to a final design that prioritized playfulness, comfort, and intuitive control. The overarching strategy was to make digital interactions feel more physical, immediate, and emotionally engaging.

Design

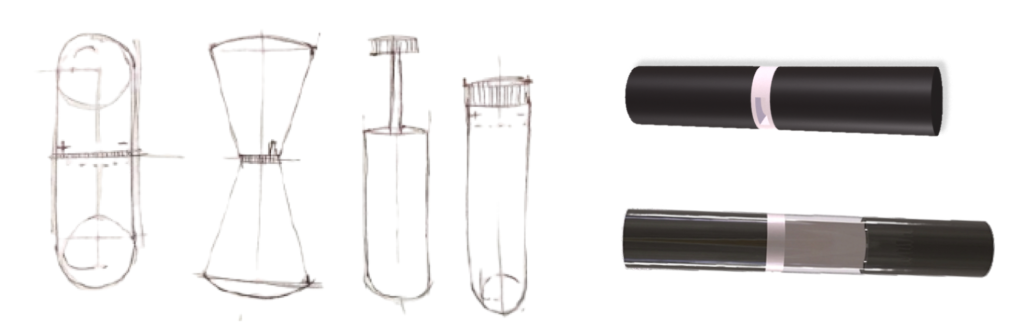

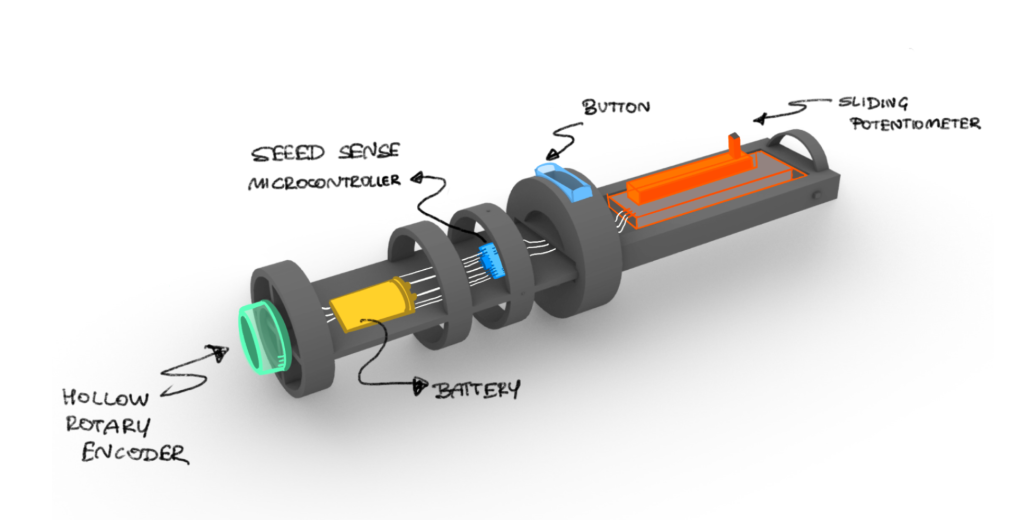

The design process moved through ideation, form exploration, and system implementation. We chose a cylindrical form for the Puppet, as it naturally supports holding, twisting, and pointing gestures. Material and ergonomic studies ensured it felt comfortable and inviting to use. The prototype was 3D printed for testing, with future iterations considering materials like wood or soft-touch rubber for warmth and better tactility.

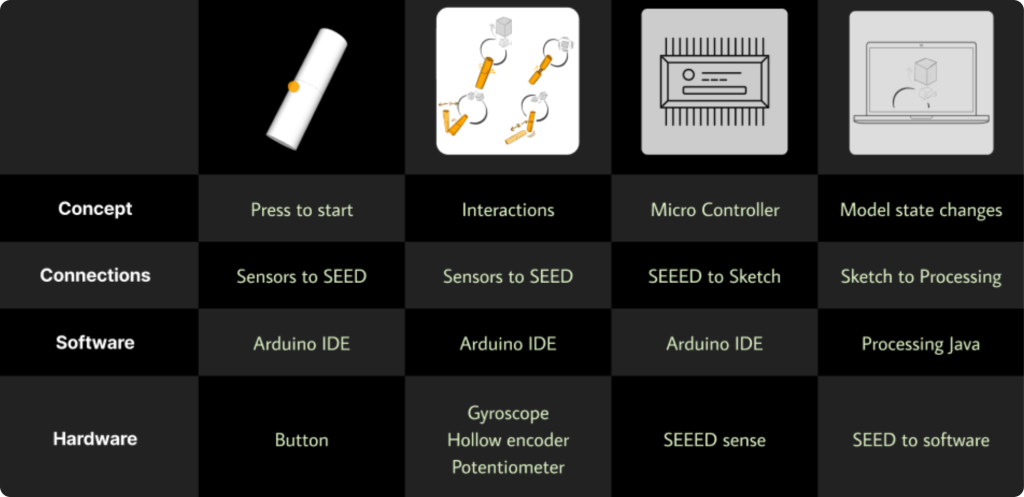

The hardware was built around a Seeed XIAO nRF52840 Sense microcontroller with an integrated IMU (accelerometer and gyroscope) and BLE communication. Additional sensors like a magnetometer, sliding potentiometer, and rotary encoder captured complex gestures. On the software side, sensor data was processed in Arduino and visualized using Processing, allowing real-time model manipulation on screen. Users could rotate, zoom, and explode 3D models through gestures, creating a direct and expressive interaction loop between body and digital space.
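As a rough illustration of the "explode" action, the sketch below pushes each part of a model away from the model's centroid by an amount that a gesture could drive. It is a minimal sketch written in Python for readability, not the project's Arduino or Processing code; the function name `explode_offsets`, the `spread` parameter, and the idea of driving `amount` from a normalized sensor value are all assumptions made for illustration.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def explode_offsets(part_centers: List[Vec3], amount: float, spread: float = 1.0) -> List[Vec3]:
    """Return displaced part centers for an exploded view.

    amount is clamped to [0, 1]; 0 leaves the model assembled and 1 gives
    the widest separation. spread scales the separation (hypothetical
    tuning parameter).
    """
    if not part_centers:
        return []

    amount = max(0.0, min(1.0, amount))

    # Centroid of all part centers: the point the parts move away from.
    n = len(part_centers)
    centroid = tuple(sum(p[i] for p in part_centers) / n for i in range(3))

    exploded = []
    for p in part_centers:
        # Move each part along the line from the centroid through the part.
        exploded.append(
            tuple(p[i] + (p[i] - centroid[i]) * amount * spread for i in range(3))
        )
    return exploded
```

With `amount` at 0 the parts stay in their assembled positions, so a single normalized input can move the model smoothly between assembled and exploded states.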

The final design embodied the principles of tangible interaction — transforming abstract 3D control into a performative, sensory experience. The result was not just a tool for interaction, but an expressive medium that merged presence, motion, and storytelling.

Introduction Video

A tangible, gesture-driven device that lets you manipulate 3D models naturally through physical movement.

Early iterations

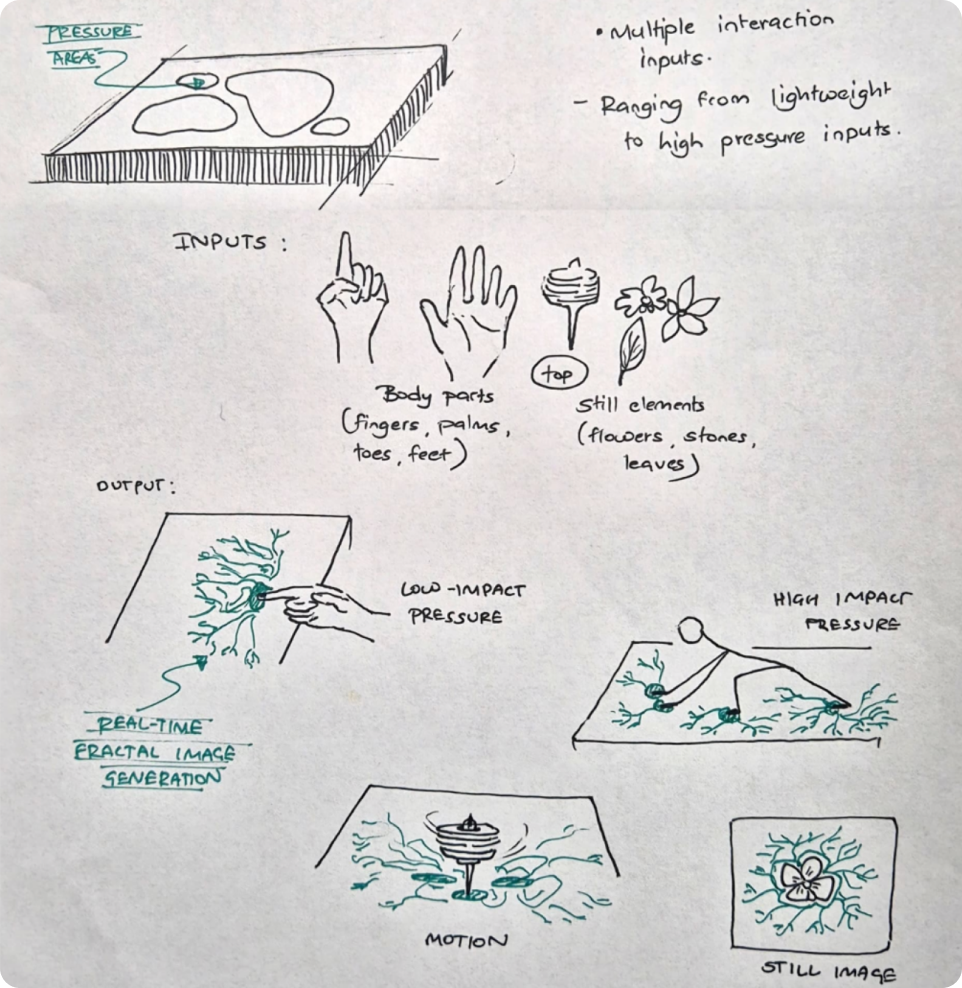

At the beginning of our research, we explored a range of ideas, each centered around different sensory and interactive possibilities. One concept played with the idea of fractal mapping, where users could use their body parts, still objects, or spinning elements to generate and manipulate visual patterns in real time. It was conceived to encourage immersion through anthropocentric imagery. It was an expressive way to visualize interaction, but we struggled to define how it could translate into a meaningful experience beyond the aesthetic.

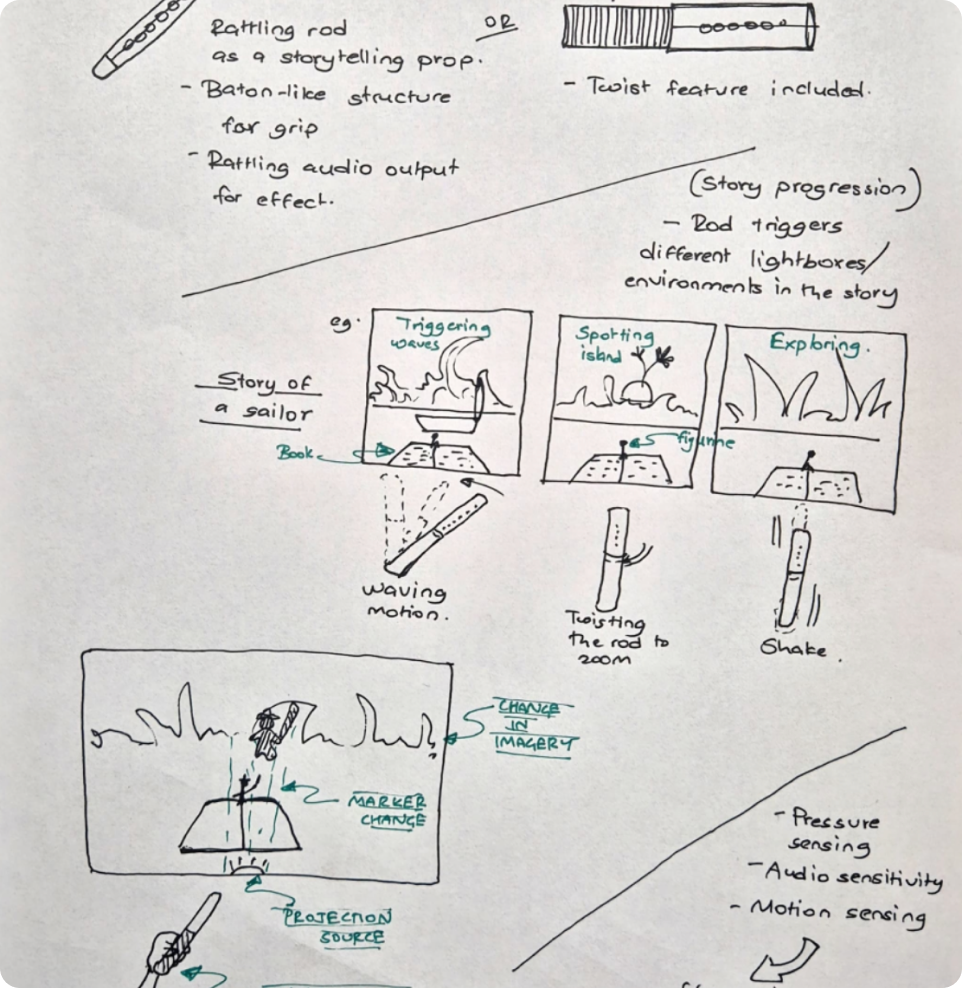

Another idea was an Interactive Wand, a tool for controlling a narrative, ideally within a holographic or mixed-reality environment. The wand would let users guide a story through movement, gestures, or proximity. While we loved the imaginative quality of this concept, it leaned more toward spectacle than grounded interaction. We therefore tried to take the idea further in other contexts, where the wand would be used to control and manipulate 3D objects. Ultimately, these explorations helped shape our understanding of what felt both playful and purposeful. They gave us direction, leading to the more tactile and approachable form that Puppet eventually took.

Final Idea

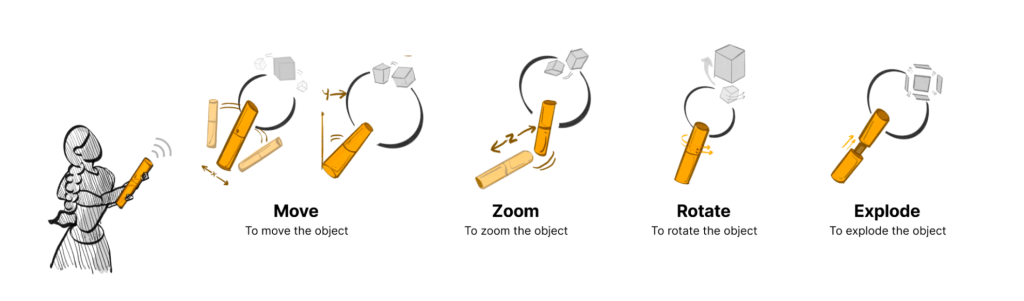

Out of all our early explorations, the idea that resonated most was the Puppet, a handheld stick designed to intuitively bridge the gap between physical movement and digital content.

Acting as a presentation tool, Puppet allows users to engage with 3D models through simple, expressive gestures. By rotating, tilting, or moving the stick in space, users can naturally navigate, zoom, and interact with the digital object on screen. This performative interaction not only enhances control but also adds a layer of presence and storytelling to the act of showcasing digital work. The simplicity and immediacy of Puppet made it feel approachable, while its expressive possibilities pointed toward a richer, more embodied way of interacting with digital spaces.

Conceptual Diagram

Affordances

Form Explorations

While our current prototype is 3D printed, we carefully considered materiality in our design process. Future iterations may explore materials that offer a warmer, more organic feel, such as wood or soft-touch rubber, to further reinforce the sense of presence and enhance physical-digital interaction. The shape and surface together aim to create an object that feels familiar in the hand, yet novel in how it connects users to digital environments.

Card Brainstorming Game framework by Eva Hornecker

Hardware Setup

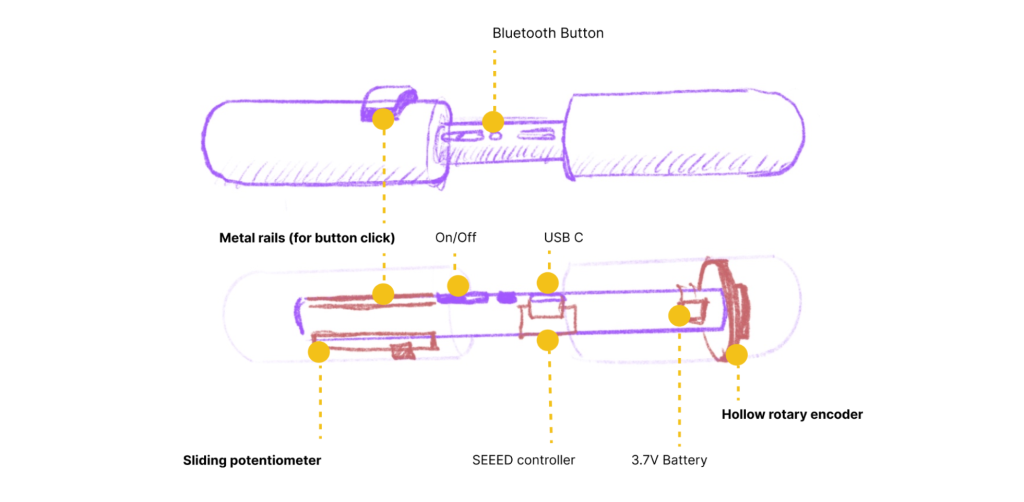

The housing for the components has a stick-like form factor, providing a compact, ergonomic enclosure that is easy to handle and intuitive to use. For prototyping, the housing is built from lightweight yet durable 3D-printed parts.

Electronic Setup

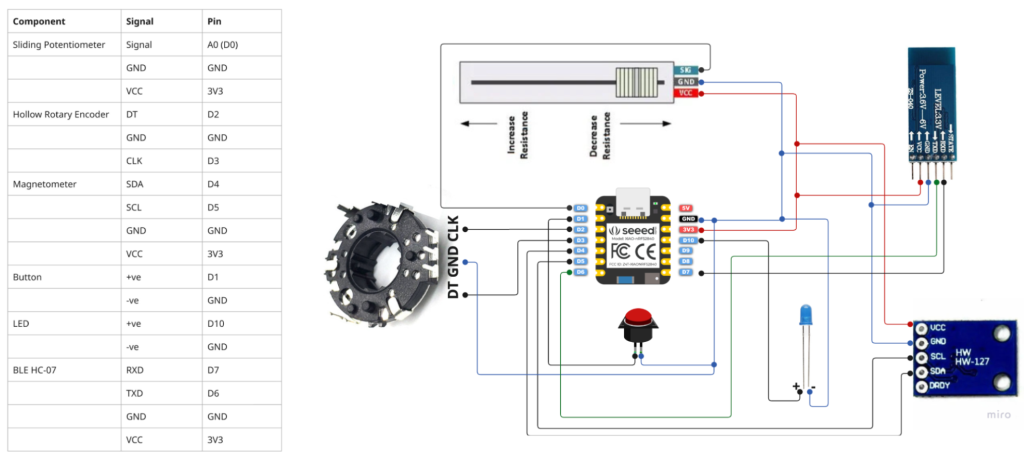

The electronic setup for this project involves several key components: a sliding potentiometer, hollow rotary encoder, magnetometer, Seeed XIAO nRF52840 Sense, LED, and HC-07 Bluetooth module.

The sliding potentiometer is used for detecting linear movement or position, providing analog input to control specific parameters in the system. The hollow rotary encoder tracks rotational movement, offering precise control over rotational inputs, ideal for adjusting settings or controlling an object’s rotation in the virtual environment.
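The two inputs described above can be turned into convenient control values with a small amount of arithmetic. The following is a minimal sketch, assuming a 10-bit analog reading (0 to 1023) for the slider and a hypothetical encoder resolution of 24 counts per revolution; neither figure comes from the project, and the function names are illustrative only.

```python
ADC_MAX = 1023        # assumed 10-bit analog reading; adjust to the actual ADC resolution
COUNTS_PER_REV = 24   # hypothetical encoder resolution; check the encoder's datasheet


def slider_fraction(raw: int, adc_max: int = ADC_MAX) -> float:
    """Convert a raw slider reading into a position between 0.0 and 1.0."""
    raw = max(0, min(adc_max, raw))  # guard against out-of-range readings
    return raw / adc_max


def encoder_angle_deg(counts: int, counts_per_rev: int = COUNTS_PER_REV) -> float:
    """Convert an accumulated encoder count into an angle in [0, 360) degrees."""
    # The modulo wraps both positive and negative counts into one revolution.
    return (counts % counts_per_rev) * 360.0 / counts_per_rev
```

A normalized slider value is easy to map onto any parameter range, and wrapping the encoder angle keeps the rotation well defined however many turns the user makes.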

The magnetometer helps in detecting the orientation of the device, adding an extra layer of accuracy to the motion tracking alongside the accelerometer and gyroscope in the Seeed XIAO nRF52840 Sense, which serves as the core microcontroller. This microcontroller integrates a 6-DOF IMU (gyroscope and accelerometer) for capturing the rotational and translational motion of the controller.
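One common way to combine accelerometer and gyroscope readings into a stable tilt estimate is a complementary filter. The source does not state which fusion method Puppet used, so the sketch below is an assumption offered only to show the general idea; the function names, the axis convention, and the `alpha` value of 0.98 are illustrative.

```python
import math
from typing import Tuple


def accel_tilt(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Estimate (pitch, roll) in degrees from the gravity vector.

    Assumes the device is not accelerating strongly, so the accelerometer
    mostly measures gravity. The axis convention here is an assumption.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return pitch, roll


def complementary_step(angle_deg: float, gyro_rate_dps: float,
                       accel_angle_deg: float, dt: float,
                       alpha: float = 0.98) -> float:
    """Advance one filter step for a single axis.

    The gyroscope rate is integrated for short-term responsiveness, and the
    accelerometer angle slowly corrects the drift that integration causes.
    """
    gyro_estimate = angle_deg + gyro_rate_dps * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_angle_deg
```

Tilt from gravity cannot observe rotation about the vertical axis, which is where a magnetometer reading is typically used as the heading reference.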

The LED provides visual feedback, indicating the operational status of the system.

The HC-07 Bluetooth module facilitates wireless communication with a host device, sending data from the sensors to a web application via Bluetooth.
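On the receiving side, each incoming message has to be decoded before it can drive the 3D model. The source does not describe the actual message format, so the sketch below assumes a hypothetical comma-separated line of eight fields (`ax,ay,az,gx,gy,gz,slider,encoder`); the `Sample` type and `parse_line` function are likewise illustrative names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Sample:
    accel: Tuple[float, float, float]   # accelerometer x, y, z
    gyro: Tuple[float, float, float]    # gyroscope x, y, z
    slider: int                         # raw slider reading
    encoder: int                        # accumulated encoder count


def parse_line(line: str) -> Optional[Sample]:
    """Parse one line in the assumed 8-field CSV format.

    Returns None for malformed lines so that a dropped or corrupted
    wireless packet does not crash the receiving loop.
    """
    fields = line.strip().split(",")
    if len(fields) != 8:
        return None
    try:
        values = [float(f) for f in fields[:6]]
        slider = int(fields[6])
        encoder = int(fields[7])
    except ValueError:
        return None
    return Sample(
        accel=(values[0], values[1], values[2]),
        gyro=(values[3], values[4], values[5]),
        slider=slider,
        encoder=encoder,
    )
```

Rejecting malformed lines instead of raising an error keeps the visualization running when a packet is lost or truncated.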

This setup combines various sensors and communication modules to create a comprehensive, interactive system for controlling a 3D object in a virtual environment using physical movements and sensor data.

Possible Use Cases

Originally developed as a tool for presenting 3D models, the Puppet’s design revealed far greater potential as development progressed. Its ability to translate natural hand gestures like twisting, sliding, and rotating into digital actions opens up a wide range of applications across creative, educational, and professional domains.

In interactive storytelling, the Puppet can act as a physical interface to guide and reveal narratives. A twist might change the scene, while a slide could unveil a hidden element, offering an intuitive and engaging way to interact with digital content. This kind of tangible control could be particularly impactful in museum installations, educational exhibits, or immersive learning environments, where audience engagement is key.

The Puppet could also be introduced in gaming and virtual exploration, where physicality is often missing from traditional input devices. Rather than pressing buttons, players could navigate and interact with digital environments through embodied gestures, adding a sense of playfulness and immersion that feels more immediate and expressive.

In more technical fields like automobile design, architecture, or medical education, the Puppet enables more intuitive manipulation of complex 3D data. Designers could rotate, zoom, or deconstruct digital models during presentations or design reviews. Medical students could explore anatomical models in an interactive, layered way that supports spatial understanding and active learning.

What distinguishes the Puppet is not just its versatility, but the way it grounds digital interaction in physical movement. By bridging the gap between the hand and the screen, it fosters a deeper connection with the content, making control more intuitive, more expressive, and ultimately more human.
